Welcome to my Research page! I’m currently a fourth-year Ph.D. student with the LIS Group within MIT CSAIL. I’m officially advised by Leslie Kaelbling and Tomás Lozano-Pérez, and have the pleasure of collaborating with many other wonderful people within CSAIL’s Embodied Intelligence Initiative. I’m extremely grateful for support from the NSF Graduate Research Fellowship.

Resume | CV | Google Scholar | Bio | GitHub | Twitter

Research Areas

I’m broadly interested in enabling robots to operate robustly in long-horizon, multi-task settings so that they can accomplish tasks like multi-object manipulation, cooking, or even performing household chores. To this end, I’m interested in neurosymbolic methods that combine classical AI planning, reasoning and constraint satisfaction with modern machine learning techniques. My research draws on ideas from task and motion planning (TAMP), reinforcement learning, foundation models, deep learning, and program synthesis.

Publications

Conference Papers

|

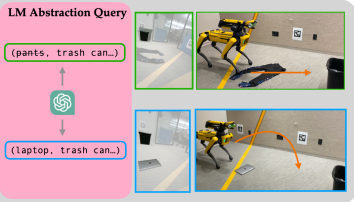

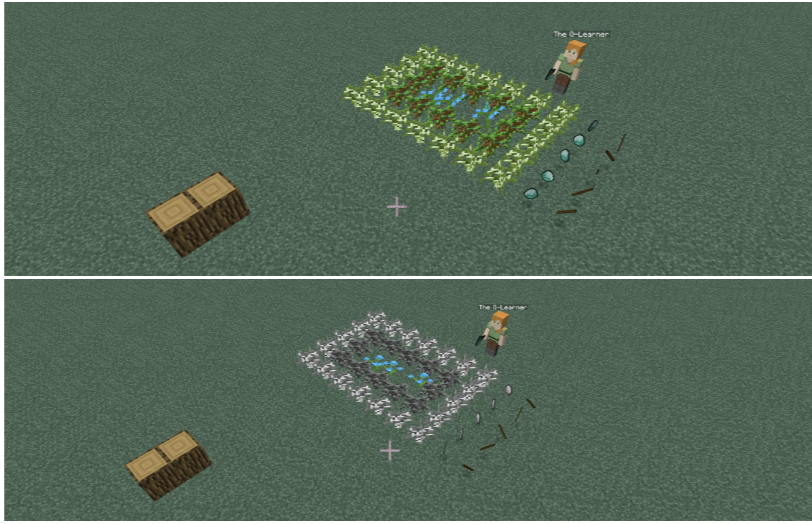

Practice Makes Perfect: Planning to Learn Skill Parameter Policies Nishanth Kumar*, Tom Silver*, Willie McClinton, Linfeng Zhao, Stephen Proulx, Tomás Lozano-Pérez, Leslie Pack Kaelbling, Jennifer Barry. RSS, 2024. website / arxiv

Introduces a new framework for robots to improve performance by efficiently learning online during deployment. Demonstrates that the proposed approach and framework can be implemented on a real robot and significantly improve its ability to solve complex, long-horizon tasks in the real world with no human intervention or environment resets!

Preference-Conditioned Language-Guided Abstractions Andi Peng, Andreea Bobu, Belinda Z. Li, Theodore R. Sumers, Ilia Sucholutsky, Nishanth Kumar, Thomas L. Griffiths, Julie A. Shah. Human-Robot Interaction (HRI), 2024. paper

Introduces a method that enables a robot to learn state abstractions, tailored to a human's specific preferences, for solving tasks.

|

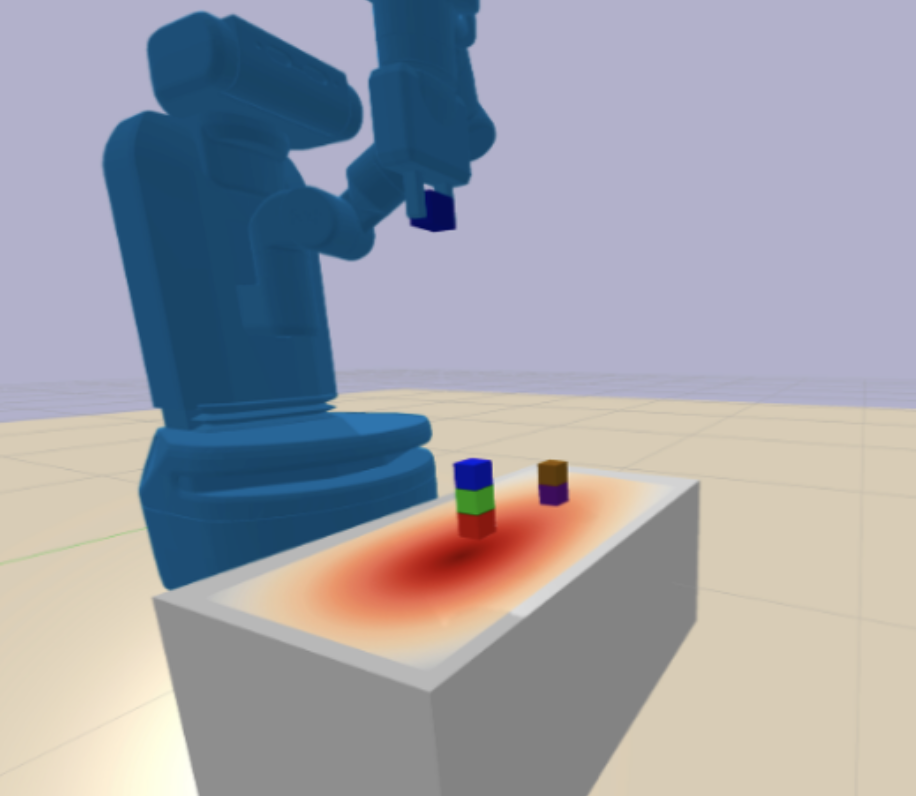

Learning Efficient Abstract Planning Models that Choose What to Predict Nishanth Kumar*, Willie McClinton*, Rohan Chitnis, Tom Silver, Tomás Lozano-Pérez, Leslie Pack Kaelbling. Conference on Robot Learning (CoRL), 2023. Also appeared at RSS Workshop on Learning for Task and Motion Planning (Best Paper Award). website / OpenReview

Shows that existing methods of learning operators for TAMP/bilevel planning struggle in complex environments with large numbers of objects. Introduces a novel operator learning approach based on local search that encourages operators to only model changes *necessary* for high-level search.

|

|

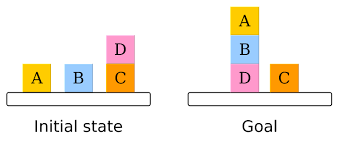

Predicate Invention for Bilevel Planning Tom Silver*, Rohan Chitnis*, Nishanth Kumar, Willie McClinton, Tomás Lozano-Pérez, Leslie Pack Kaelbling, Joshua Tenenbaum. AAAI, 2023 (Oral). Also appeared at RLDM, 2022 (Spotlight Talk). arxiv / code

Introduces a new, program-synthesis inspired approach for learning neuro-symbolic relational state and action abstractions (predicates and operators) from demonstrations. The abstractions are explicitly optimized for effective and efficient bilevel planning.

|

|

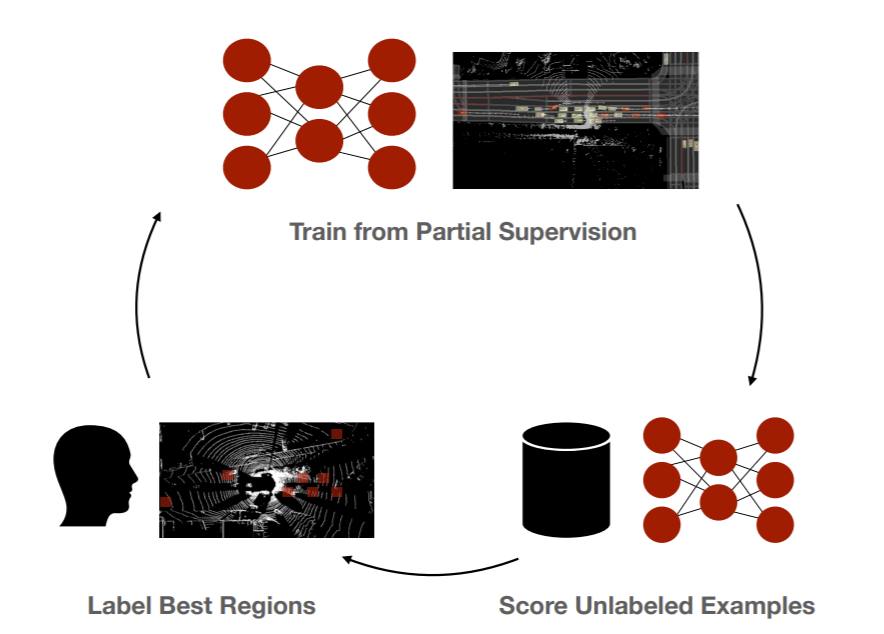

Just Label What You Need: Fine-Grained Active Selection for Perception and Prediction through Partially Labeled Scenes Nishanth Kumar*, Sean Segal*, Sergio Casas, Mengye Ren, Jingkang Wang, Raquel Urtasun. Conference on Robot Learning (CoRL), 2021. OpenReview / arXiv / poster

Introduces fine-grained active selection via partial labeling, making data labeling for perception and prediction more efficient.

|

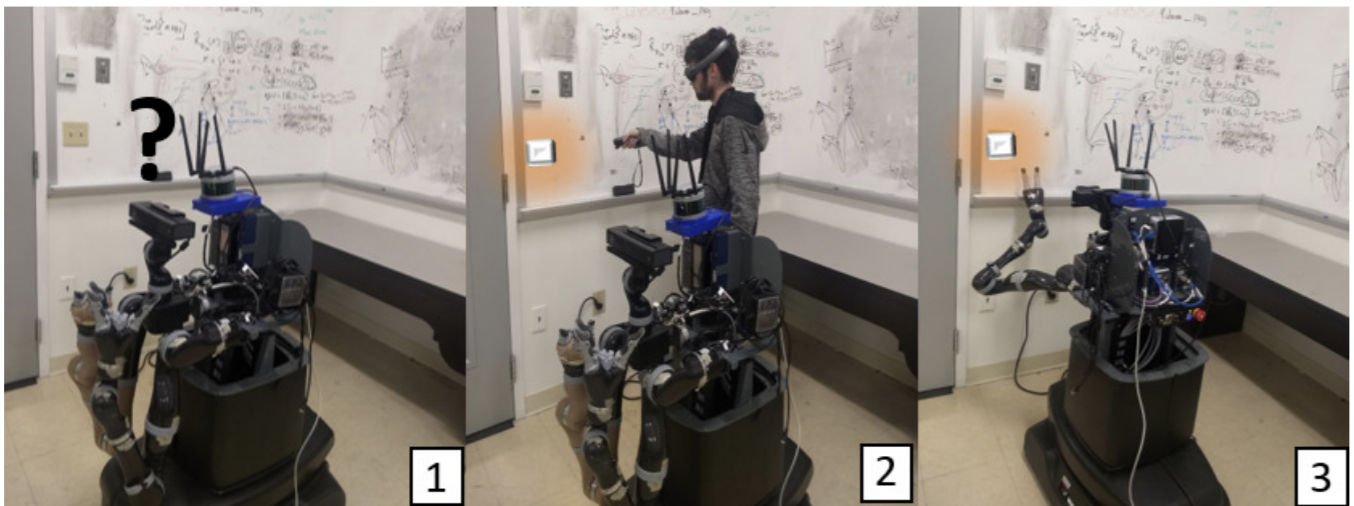

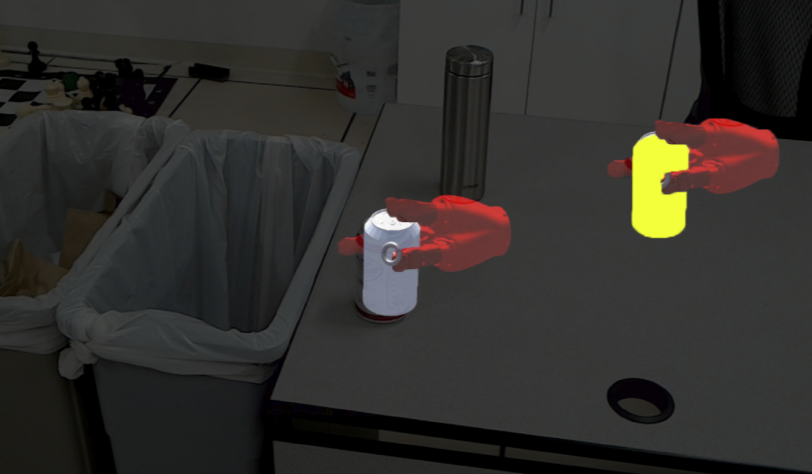

Building Plannable Representations with Mixed Reality Eric Rosen, Nishanth Kumar, Nakul Gopalan, Daniel Ullman, George Konidaris, Stefanie Tellex. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020. paper / video

Introduces Action-Oriented Semantic Maps (AOSMs), which robots can use to perform a wide variety of household tasks, and a system for specifying them with mixed reality.

|

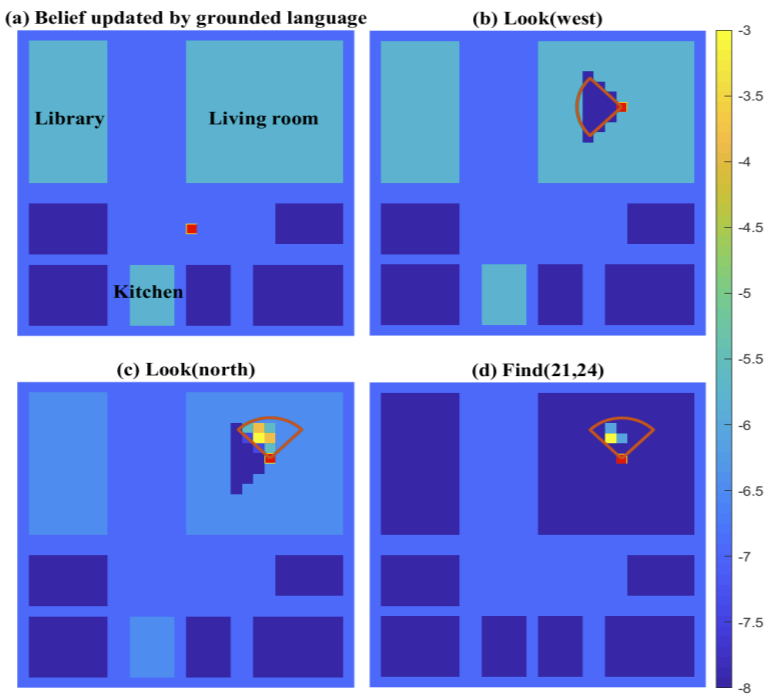

Multi-Object Search using Object-Oriented POMDPs Arthur Wandzel, Yoonseon Oh, Michael Fishman, Nishanth Kumar, Lawson L.S. Wong, Stefanie Tellex. IEEE International Conference on Robotics and Automation (ICRA), 2019. paper / video

Introduces the Object-Oriented Partially Observable Monte-Carlo Planning (OO-POMCP) algorithm for efficiently solving Object-Oriented Partially Observable Markov Decision Processes (OO-POMDPs) and shows how this can enable a robot to efficiently find multiple objects in a home environment.

Workshop Papers and Extended Abstracts

|

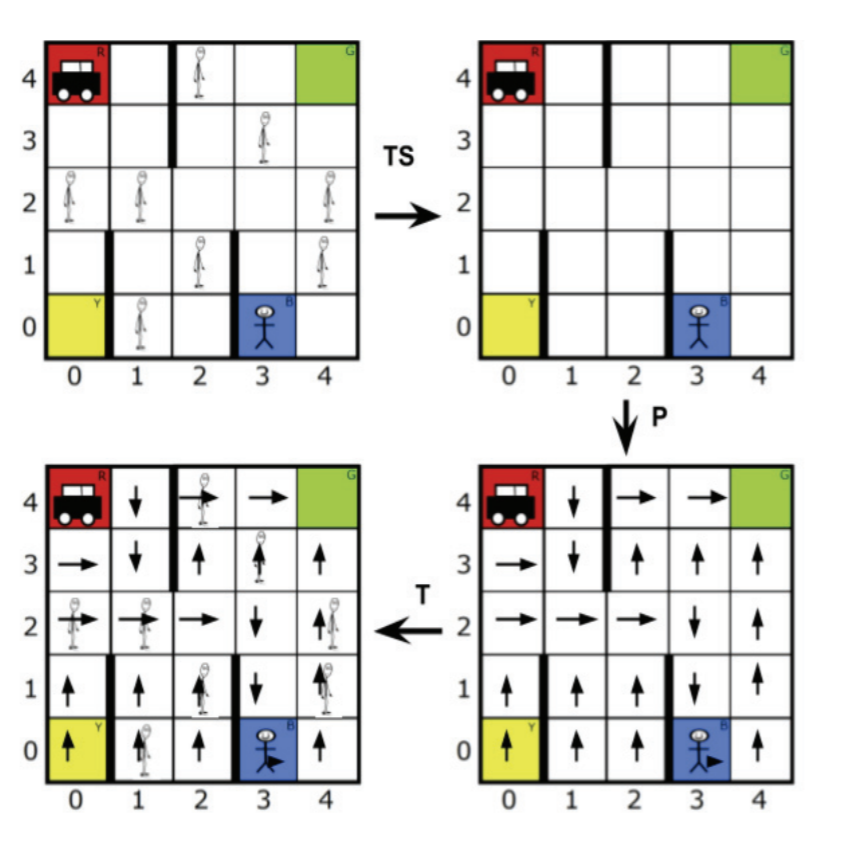

Task Scoping: Generating Task-Specific Simplifications of Open-Scope Planning Problems Michael Fishman*, Nishanth Kumar*, Cameron Allen, Natasha Danas, Michael Littman, Stefanie Tellex, George Konidaris. IJCAI Workshop on Bridging the Gap Between AI Planning and Reinforcement Learning, 2023. paper

Introduces a method for efficiently pruning large classical planning problems to exclude states and actions that are irrelevant to a particular goal, so that agents can solve very large, 'open-scope' domains that support multiple goals.

PDDL Planning with Pretrained Large Language Models Tom Silver*, Varun Hariprasad*, Reece Shuttleworth*, Nishanth Kumar, Tomás Lozano-Pérez, Leslie Pack Kaelbling. NeurIPS FMDM Workshop, 2022. OpenReview

Investigates the extent to which pretrained language models can solve PDDL planning problems on their own, or be used to guide a planner.

Task Scoping for Efficient Planning in Open Worlds Nishanth Kumar*, Michael Fishman*, Natasha Danas, Michael Littman, Stefanie Tellex, George Konidaris. AAAI Conference on Artificial Intelligence, Student Workshop, 2020. paper

Introduces high-level ideas for how large Markov Decision Processes (MDPs) might be efficiently pruned to include only states and actions relevant to a particular reward function. This paper is subsumed by our arXiv preprint on task scoping.

Knowledge Acquisition for Robots through Mixed Reality Head-Mounted Displays Nishanth Kumar*, Eric Rosen*, Stefanie Tellex. The Second International Workshop on Virtual, Augmented and Mixed Reality for Human Robot Interaction, 2019. paper

Sketches high-level ideas for how a mixed reality system might enable users to specify information for a robot to perform pick-and-place and other household tasks. This work is subsumed by our AOSM work.

Preprints

|

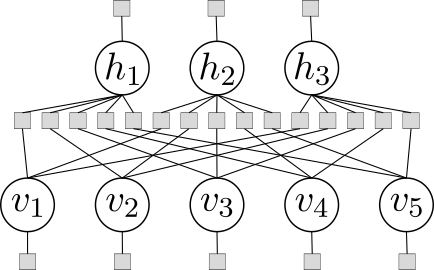

PGMax: Factor Graphs for Discrete Probabilistic Graphical Models and Loopy Belief Propagation in JAX Guangyao Zhou*, Nishanth Kumar*, Miguel Lázaro-Gredilla, Shrinu Kushagra, Dileep George. arXiv, 2022. arxiv / blog post / code

Introduces a new JAX-based framework that aims to make it easy to build and run inference on probabilistic graphical models (PGMs).

|

|

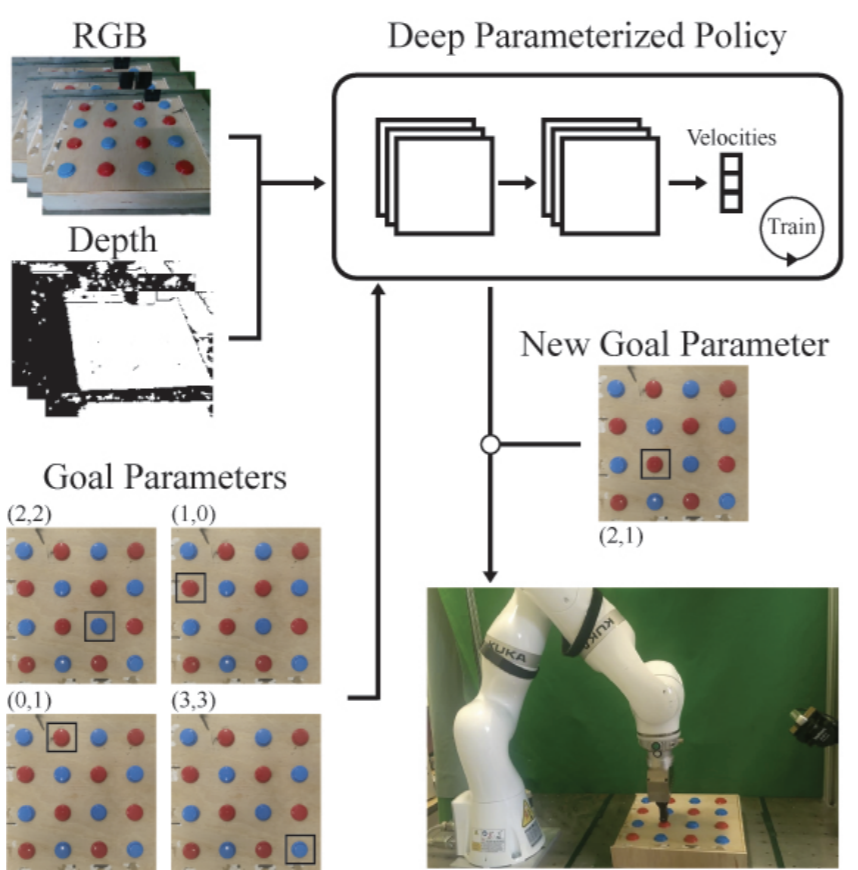

Learning Deep Parameterized Skills from Demonstration for Re-targetable Visuomotor Control Nishanth Kumar*, Jonathan Chang*, Sean Hastings, Aaron Gokaslan, Diego Romeres, Devesh Jha, Daniel Nikovski, George Konidaris, Stefanie Tellex. arXiv, 2020. arxiv

Shows how the generalization capabilities of Behavior Cloning (BC) can be improved by learning a policy parameterized by an input that lets the agent distinguish between different goals (e.g., different buttons to press in a grid). Includes extensive experiments in simulation and on two different robots.

Industry Experience and Research Collaborations

- NVIDIA Research, Seattle, USA.

  Working with Caelan Garrett, Fabio Ramos, and Dieter Fox in the Seattle Robotics Lab on combining ideas from TAMP with foundation models for real-world tasks. Stay tuned for more!

- The AI Institute, Cambridge, USA.

  Worked with Jennifer Barry and many other wonderful researchers on combining planning and learning to enable robots to solve real-world long-horizon tasks. These efforts have resulted in one publication so far (check it out here!). I was also the first-ever intern to start at the AI Institute :).

- Vicarious AI, Union City, USA.

  Worked with Stannis Zhou, Wolfgang Lehrach, and Miguel Lázaro-Gredilla on developing PGMax, an open-source framework for ML with PGMs.

- MERL, Cambridge, USA.

  Worked with Diego Romeres, Devesh Jha, and Daniel Nikovski on furthering Learning from Demonstration for industrial robots.

- Uber ATG Research, Toronto, Canada.

  Worked with Sean Segal, Sergio Casas, Wenyuan Zeng, Jingkang Wang, Mengye Ren, and others on a project exploring Active Learning for Self-Driving Vehicles that led to a paper (read it here!). I had the honor of being advised by Prof. Raquel Urtasun.

Invited Talks

- Practice Makes Perfect: Planning to Learn Skill Parameter Policies

  Manipulation and Learning Reading Group at the AI Institute. March 5, 2024.

- Inventing Plannable Abstractions from Demonstrations

  Brown Robotics Group. April 28, 2023.

- Task Scoping: Generating Task-Specific Abstractions for Planning

  MIT LIS Group Meeting. February 12, 2021.

- Let’s Talk about AI and Robotics

  I was interviewed about my work, experiences, and advice on research for an episode of the interSTEM YouTube channel.

- Action-Oriented Semantic Maps via Mixed Reality

  The Second Ivy League Undergraduate Research Symposium (ILURS). Best Plenary Presentation Award.

  The University of Pennsylvania. April 2019.

- Building intelligent, collaborative robots

  Machine Intelligence Conference 2019

  Boston University. September 2019.

Mentorship

I love mentoring students and junior researchers with interests related to my own; I find that this not only leads to cool new papers and ideas, but also helps me become a better researcher and teacher!

Mentees

Current

- Annie Feng (Fall 2023 - Present): working on using Large Language Models (LLMs) for model-based learning and exploration.

- Alicia Li (Spring 2024 - Present): working at the intersection of planning and deep RL for robotics tasks.

- Jing Cao (mentored jointly with Aidan Curtis) (Spring 2024 - Present): working on combining different Foundation Models together to solve long-horizon robotics tasks via planning.

Past

- Kathryn Le (mentored jointly with Willie McClinton) (IAP 2023 and Spring 2023): worked on using Task and Motion Planning to solve tasks from the BEHAVIOR benchmark; currently continuing undergrad at MIT.

- Anirudh Valiveru (IAP 2023): worked on leveraging Large Language Models (LLMs) for efficient exploration in relational environments; currently continuing undergrad at MIT and pursuing research with a different group.

- Varun Hariprasad (mentored jointly with Tom Silver) (Summer 2022): high-school student at MIT via the RSI program; currently an undergrad at MIT.

Awards

- Qualcomm Innovation Fellowship Finalist, 2022.

- NSF GRFP Fellow, 2021.

- Berkeley Fellowship (declined), 2021.

- Sigma Xi Inductee, 2021.

- CRA Outstanding Undergraduate Research Award Finalist, 2021.

- Tau Beta Pi Inductee, 2020.

- Heidelberg Laureate, 2020.

- Goldwater Scholarship, 2020.

- CRA Outstanding Undergraduate Research Award Honorable Mention, 2020.

- Karen T. Romer Undergraduate Teaching and Research Award, 2019.

- Best Plenary Presentation, The Second Ivy League Undergraduate Research Symposium, 2019.

Teaching

- Head Teaching Assistant, CSCI 2951-F: Learning and Sequential Decision Making

  Brown University, Fall 2019

- Teaching Assistant, ENGN 0031: Honors Intro to Engineering

  Brown University School of Engineering, Fall 2018

Selected Press Coverage

- Helping robots practice skills independently to adapt to unfamiliar environments

- Engineering’s Dwyer, Dastin-van Rijn and Kumar Selected as NSF Graduate Research Fellows

- Bawabe, Kumar, Sam, And Walke Receive CRA Outstanding Undergraduate Researcher Honors

- Nishanth Kumar named 2020 Barry M. Goldwater Scholar

- 2020 Barry Goldwater Scholars Include Many Impressive Indian American Researchers

- Undergrad Nishanth Kumar Wins Best Plenary Presentation At ILURS